call us at: (248)602-2682 OR Schedule a time to meet with an advisor: Sonareon Schedule

Attend our upcoming Workshop March 26 either at 10AM or 1PM. Register today - $99. Get free AI Assessment.

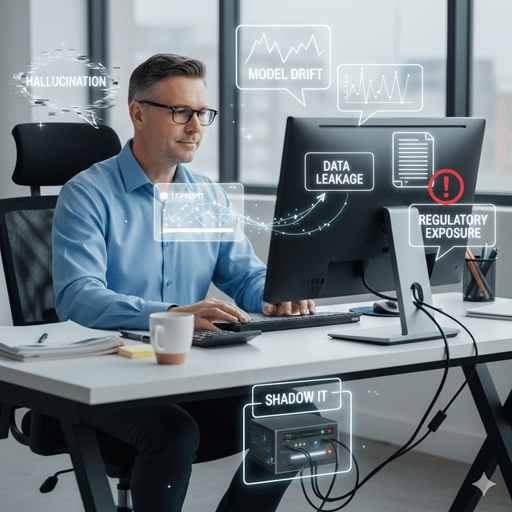

5 AI Risks CPA Firms Miss

Remember how The Sixth Sense hid its twist in plain view? These 5 AI risks are doing the same thing to CPA firms, and most won't see them coming until it's too late.

Peter Serzo

1/20/2026 · 3 min read

In the movie The Sixth Sense, nothing is hidden. The director points to the ending with dozens of tiny details that no one notices until the very end. Artificial intelligence is transforming the accounting world (and most industries) in much the same way. AI is becoming a silent partner in day‑to‑day operations while firms turn a blind eye. The irony is that firms are quick to embrace the efficiency, but many overlook the dozens of details in plain sight. Here are five AI risks most firms miss and how to address them.

1. Shadow AI: Staff Using Tools the Firm Never Approved

Employees are using AI tools such as ChatGPT, Gemini, Copilot, and Perplexity on their own, often without realizing they may be exposing client data in the process. This “shadow AI” problem is exploding across professional services.

Why it’s dangerous

• Client data may be uploaded into tools with unclear privacy policies

• No audit trail exists for how outputs were generated

• Firms lose control over data governance and security

What to do instead

Create an approved AI tool list, define “safe use” rules, and train staff on what not to paste into public models. This should be part of your WISP (Written Information Security Program).
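For firms that want to back the approved list with a technical check, here is a minimal Python sketch. The hostname and the function name are hypothetical placeholders, not real products or an existing API; a real control would more likely live in a web proxy or browser policy.

```python
from urllib.parse import urlparse

# Hypothetical allowlist: hostnames of the AI tools the firm has approved.
APPROVED_AI_HOSTS = {
    "copilot.example-firm.com",
}


def is_approved_ai_tool(url: str) -> bool:
    """Return True only if the URL's host is on the firm's approved list."""
    host = (urlparse(url).hostname or "").lower()
    return host in APPROVED_AI_HOSTS
```

Anything not on the list is treated as unapproved by default, which matches the spirit of a written policy: tools are added deliberately, not discovered after the fact.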

2. Hallucinated Facts That Slip Into Client Deliverables

AI is confident and polished, and it can be completely wrong. It is that loud know‑it‑all in the office. The output reads with authority, and CPAs can miss the errors in their haste to produce. This opens the firm up to liability.

Where this shows up

• Tax memos citing nonexistent IRS rulings

• Financial statement drafts with incorrect calculations

• Advisory insights based on fabricated data

What to do

Keep a human in the loop, a popular AI mantra. Mitigation requires human review, verification, and a documented “AI‑assisted” workflow.

3. Data Leakage Through Prompting

A prompt is what you type into the model, much like search criteria (an extreme simplification!). But models like ChatGPT, Gemini, and others do a lot more than search: they create from your input. Even when employees think they’re being careful, sensitive information can leak through prompts:

• Client names

• EINs

• Financial details

• Internal methodologies

• Proprietary templates

Why it’s dangerous

Using Copilot or Gemini feels like running a simple search and getting better results. However, once data is entered into a public model, it may be retained, logged, or used to train future systems, depending on the provider. This is a particular concern with the "free" versions of the public models. The models are a LOT more than search.

What to do

AI models like Gemini, ChatGPT, and others should have a defined usage pattern, and staff should be trained on it. Work that needs more from the model should run on enterprise‑grade AI with contractual data protections, and the firm should enforce a “no client identifiers” rule in prompts. Not every employee needs this level of access.
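One way to support a “no client identifiers” rule is to scrub prompts before they leave the firm. The Python sketch below is illustrative only: it assumes EINs appear in the usual NN-NNNNNNN form and that the firm supplies its own list of client names. The function name and placeholders are hypothetical, and a simple pattern match like this will not catch every identifier.

```python
import re

# Assumes EINs are written as two digits, a hyphen, then seven digits.
EIN_PATTERN = re.compile(r"\b\d{2}-\d{7}\b")


def redact_prompt(prompt: str, client_names: list[str]) -> str:
    """Replace EINs and known client names with neutral placeholders."""
    redacted = EIN_PATTERN.sub("[EIN]", prompt)
    for name in client_names:
        if name:  # skip empty strings, which would match everywhere
            redacted = re.sub(
                re.escape(name), "[CLIENT]", redacted, flags=re.IGNORECASE
            )
    return redacted


print(redact_prompt(
    "Summarize the filing for Acme Widgets LLC, EIN 12-3456789.",
    ["Acme Widgets LLC"],
))
# prints: Summarize the filing for [CLIENT], EIN [EIN].
```

A filter like this is a safety net, not a substitute for training. Financial details and internal methodologies have no fixed pattern to match.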

4. Model Drift That Quietly Changes Output Quality

AI models evolve constantly. A prompt that worked flawlessly in March may produce different results in July. For a firm using agentic AI, this becomes a problem if the workflow's owner did not take it into account.

Why this matters for CPA firms

• Audit procedures may become inconsistent

• Tax research summaries may shift in tone or accuracy

• Advisory insights may vary across engagements

This creates a compliance nightmare: the same workflow can produce different results over time without any change in staff behavior.

What to do

Fix and document your prompts and agentic AI workflows, and periodically re‑validate outputs. This should be part of the WISP.
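As a rough illustration of periodic re‑validation, the Python sketch below compares a fresh output against a saved baseline for the same fixed prompt. The function names and the 0.9 threshold are assumptions chosen for the example. Text similarity is only a crude proxy for accuracy, so a flagged result should go to a human reviewer rather than be judged automatically.

```python
import difflib
import hashlib


def prompt_fingerprint(prompt: str) -> str:
    """Stable ID for a fixed prompt, so any edit to the prompt is detectable."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()


def similarity(baseline: str, current: str) -> float:
    """Character-level similarity between two outputs, from 0.0 to 1.0."""
    return difflib.SequenceMatcher(None, baseline, current).ratio()


def needs_review(baseline: str, current: str, threshold: float = 0.9) -> bool:
    """Flag the output for human review when it drifts from the baseline."""
    return similarity(baseline, current) < threshold
```

Storing the fingerprint next to the baseline output lets the firm tell apart two cases: the prompt changed, or the prompt stayed the same and the model's behavior changed.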

5. Regulatory Exposure Due to Lack of AI Governance

Regulators are paying attention. CPA firms are not ready. Emerging rules from the FTC, state privacy laws, and professional standards increasingly expect firms to:

• Disclose AI use

• Protect client data

• Maintain audit trails

• Demonstrate human oversight

What to do

Governance is key. Here are the essentials, which will be expanded upon in another blog post:

• An AI usage policy

• A risk assessment framework

• Clear approval processes

• Documentation of AI‑assisted work
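Documentation of AI‑assisted work can be as simple as one structured record per deliverable. The Python sketch below is one possible shape, not a prescribed standard: the class name, field names, and the rule that the reviewer must differ from the preparer are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIWorkRecord:
    """One audit-trail entry for a piece of AI-assisted work (hypothetical schema)."""
    engagement_id: str
    tool: str
    purpose: str
    prepared_by: str
    reviewed_by: Optional[str] = None
    created_at: str = ""

    def __post_init__(self) -> None:
        # Stamp the record in UTC if no timestamp was supplied.
        if not self.created_at:
            self.created_at = datetime.now(timezone.utc).isoformat()

    def is_releasable(self) -> bool:
        """Require sign-off by a reviewer other than the preparer."""
        return bool(self.reviewed_by) and self.reviewed_by != self.prepared_by

    def to_json_line(self) -> str:
        """Serialize as one JSON line, suitable for an append-only log."""
        return json.dumps(asdict(self), sort_keys=True)
```

A record like this covers two of the expectations listed above at once: it leaves an audit trail, and it shows that a human reviewed the work before it reached the client.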

These aren’t sci‑fi risks or abstract “what‑ifs.” They’re practical, operational, and regulatory blind spots that can expose a CPA firm to compliance failures, reputational damage, and even legal liability. AI is not just a productivity tool; it is a new operational layer that touches client data, professional judgment, and regulatory obligations. Firms that ignore these risks are setting themselves up for preventable problems.

Use your sixth sense and address these risks proactively, and you will not find yourself looking at a ring that dropped to the floor.

Connect

Email: info@sonareon.com

© 2025 Sonareon. All rights reserved.


Phone: 248-429-9110