call us at: (248)602-2682 OR Schedule a time to meet with an advisor: Sonareon Schedule

Attend our upcoming Workshop March 26 either at 10AM or 1PM. Register today - $99. Get free AI Assessment.

Report Card: Which AI Giants Truly Honor Your Customers' Data Privacy?

We evaluated the privacy practices of leading LLMs like GPT, Gemini, Claude, and Grok. We break down who keeps what and how to opt out of having them train on your customers' data, revealing which models truly safeguard your information and which leave you exposed.

Peter Serzo

11/9/2025 · 2 min read

You may be an accounting firm or a tax preparer, work in the health field, or handle customer data in some other fashion. Either way, you need to protect that data. One of the key questions to ask when deciding which tool to use, and when, is: WHAT IS THIS TOOL DOING WITH THE DATA I PUT INTO IT? Is it using this data to train the model?

Before we look at the comparisons, let me preface by saying: do not ever put any PII, PCI (credit card numbers), or PHI into these models. While I have researched what they do with the data and present it here, the fact of the matter is that you are using an outside application, and you have no control over or visibility into what happens to the data you provide.
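If you want a safety net for that rule, here is a minimal Python sketch of scrubbing text before it goes anywhere near a chat window. Everything in it is an assumption for illustration: the function names (`redact`, `luhn_valid`), the placeholder labels, and the three patterns are hypothetical and not part of any of the tools compared here. It only catches obvious formats (US-style SSNs, card numbers that pass the Luhn checksum, email addresses) and is no substitute for a real data-loss-prevention tool.

```python
import re

# Assumed, illustrative patterns only; real PII/PHI detection needs far more.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact(text: str) -> str:
    """Replace likely SSNs, card numbers, and emails with placeholders."""

    def card_repl(match: re.Match) -> str:
        digits = re.sub(r"\D", "", match.group())
        # Only redact digit runs that look like real card numbers.
        return "[CARD]" if luhn_valid(digits) else match.group()

    text = SSN_PATTERN.sub("[SSN]", text)
    text = CARD_PATTERN.sub(card_repl, text)
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return text


if __name__ == "__main__":
    sample = "Client SSN 123-45-6789, card 4111 1111 1111 1111, email jane@example.com."
    print(redact(sample))
```

The Luhn check is a design choice to cut down on false positives such as invoice numbers; if you would rather be overly cautious, redact every long digit run instead. Either way, treat this as a second line of defense, not permission to paste client records.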

One other note: this comparison covers the free versions, NOT the paid versions, which absolutely offer additional protections.

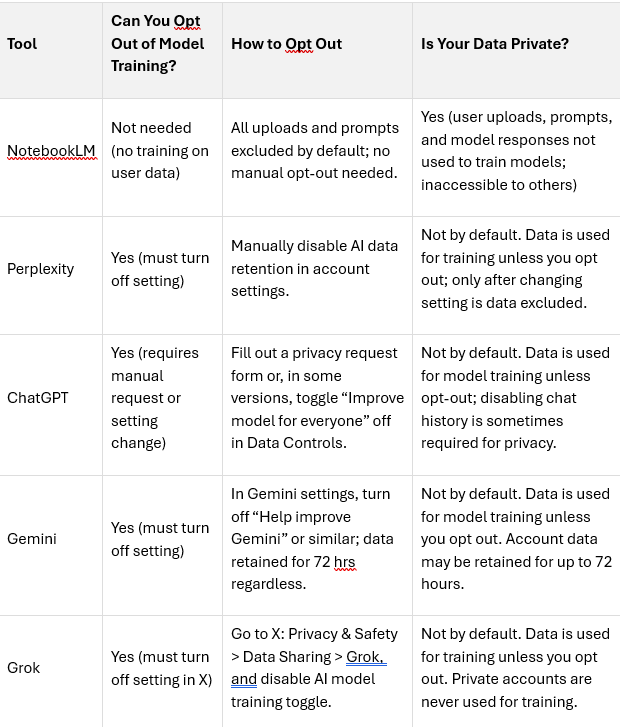

Here is a comparison of the major free AI assistants—NotebookLM, Perplexity, ChatGPT, Gemini, and Grok—focused on privacy, data training policies, and opt-out mechanisms for users on their free versions.

Key Points

NotebookLM stands out for not using any uploads, prompts, or results for model training, so no user action is needed for privacy. I LOVE this tool and use it a lot.

Perplexity, ChatGPT, Gemini, and Grok all require users to manually opt out if they don’t want their chats used to improve models. In the free versions, the default is that your data is used for training.

The opt-out is effective from the point it’s enabled; prior interactions may already have been used for training unless deleted according to each service’s data retention policies.

Privacy is not absolute: even after opting out, brief retention windows or human review for abuse prevention can apply (e.g., Gemini holds prompts for 72 hours).

Technical Stack

This is my personal technical stack in order of importance:

NotebookLM - this is very effective because I can fence in the data with ONLY the sources I upload into the application.

Perplexity - Every claim comes with a reference, so I can track down the source and double-check its veracity.

Copilot/ChatGPT

Connect

Email: info@sonareon.com

© 2025 Sonareon. All rights reserved.

Phone: 248-429-9110